Schema formation in synaesthesia

The following is an extract (just the text, not the figures) from a paper I wrote for the proceedings of the V

International Conference Synesthesia: Science and Art. Alcalá la Real de Jaén. Spain. 16–19th May

2015.

Many of the ideas were also developed in a paper with my colleague Fiona Newell, on Multisensory Integration and Cross-Modal Learning in Synaesthesia: a Unifying Model.

Abstract

Psychologists use the term “schema” to

refer to the information or knowledge that makes up our concept of an object. It

includes all the attributes that the object has, such as the shapes of a letter

and the sounds it can make, the shape of a numeral and the value it represents,

or the face of a person and their name and everything you know about them. In

many cases, those attributes are represented across very different brain areas

(such as those conveying visual or auditory information, for example). With

experience, the representations of the different attributes of an object become

linked together, in the mind, by repeated co-occurrence.

At the level of the brain, this must

involve some kind of strengthening of connections across areas of the cerebral

cortex so that a pattern of activity in one area (say that induced by the sight

of the letter “A”) reliably co-activates or primes a particular pattern of

activity in another area (say the sounds of the letter “A”). The brain is wired

to enable this kind of communication between different areas, so that these

sorts of associations can be learned by repeated exposure to contingent stimuli,

for example as we learn the alphabet or our numbers.

Synaesthesia is characterised by the

incorporation of additional attributes into the schema of an object – ones not

reflecting the characteristics of the object itself but some internal

associations triggered by it. I propose a model to account for synaesthesia

based on innate differences in wiring between cortical areas, which lead to

additional percepts (such as colours) being triggered by an object during

learning. These repeated patterns eventually result in stable synaesthetic

associations, despite the lack of reinforcement from the external world. This

model can account for the heritability of the condition and evidence of

cortical hyperconnectivity, but also for the learned nature of many of the

inducing stimuli and observed trends in letter-colour or word-taste pairings.

In this conceptual framework, a natural

contrast emerges between synaesthesia, on the one hand, and a number of other conditions,

collectively known as “agnosias”, on the other. These include dyslexia and

dyscalculia, face blindness, tone deafness, colour agnosia and others. These

conditions seem to reflect an inability to incorporate all the attributes of an

object into a schema, resulting in a “lack of knowledge” of particular types of

objects. There is evidence that these result from decreased connectivity

between brain regions. It is hoped that the study of synaesthesia may also

inform on the mechanisms underlying these less benign conditions.

Introduction

Synaesthesia is often

described as a cross-sensory phenomenon, where, for example, particular sounds

(such as words or musical notes) will induce a secondary percept (such as a

colour or taste), which is specific for each stimulus (Bargary and Mitchell, 2008; Hubbard and Ramachandran, 2005). While these florid types of synaesthesia

involve very vivid perceptual experiences, the more common manifestation is

associative (Simner, 2012). These cases involve the certain knowledge

that some object, such as a letter or number, has, in addition to its normal

attributes (shape, sound, value, etc.), some extra traits associated with it,

such as spatial position, colour, texture, even gender and personality. These associated characteristics are stable,

idiosyncratic and have typically formed an intrinsic part of the person’s

schema of that object for as long as they can remember.

A recurrent question in relation to

synaesthesia is whether it represents a truly distinct phenomenon,

qualitatively different from typical perception, or reflects instead an

amplification or exaggeration of normal processes of multisensory integration (Deroy and Spence, 2013b; Ward et al., 2006). A

related question concerns the extent to which particular synaesthetic

associations arise arbitrarily through intrinsic neural mechanisms or are

driven instead by experience and learning in ways that may be common to all

people (Watson et al., 2014). Both these questions bear on what is arguably the central question

in the field: why do some people develop synaesthesia while most do not? The

answers to these questions thus determine fundamentally how we conceive of

synaesthesia.

On the one hand, synaesthesia represents a

dichotomous phenotype – people are relatively easily categorised as

synaesthetes or non-synaesthetes. Moreover, the condition is clearly genetic in

origin, often running in families with a Mendelian pattern of inheritance (some

members clearly having the condition, others clearly not) (Asher et al., 2009; Barnett et al., 2008; Baron-Cohen et al., 1996; Galton, 1883; Rich et al., 2005; Ward and Simner,

2005). The

primary answer to the question of why some people develop synaesthesia is therefore

that they inherit a genetic variant that strongly predisposes to the condition.

This argues for some intrinsic difference as a necessary starting point in

explaining the condition and against a model where general processes are

sufficient to explain it.

On the other hand, a number of lines of

evidence suggest that whatever is happening in synaesthesia, it relies on or at

least interacts with processes of multisensory integration that are common

across all people. These include both acute cross-sensory activation and

longer-term cross-modal learning.

First, there is strong evidence that most

areas of what has been deemed unisensory cortex are in fact essentially

multisensory, with extensive anatomical cross-connectivity and at least some

modulatory inputs from other modalities providing credible substrates for

cross-sensory interactions (Ghazanfar and Schroeder, 2006; Qin and Yu, 2013). The

idea that such interactions may be always present but not always consciously

accessible is reinforced by the activation of visual areas in blind or

blindfolded people (Bavelier and Neville, 2002) and by the phantasmagoric audiovisual synaesthetic experiences associated

with certain hallucinogens, such as lysergic acid diethylamide (LSD), psilocybin or

mescaline (Schmid et al., 2014; Sinke et al., 2012). Such

experiences indicate that the barriers between the senses are certainly not as

rigid as typical experience suggests.

However, drug-induced synaesthetic

experiences have quite a different phenomenology, tending to involve florid,

detailed and complex visual experiences induced by sound, especially music (Deroy and Spence, 2013b; Sinke et al., 2012). By

contrast, developmental synaesthesia is characterised by more stable and sedate

cross-sensory pairings of particular stimuli with particular additional

percepts or conceptual attributes. These tend to be quite simple in nature,

involving perceptual primitives rather than complex forms. The relevance of

drug-induced synaesthesia to the mechanisms underlying developmental forms thus

remains unproven.

There is another line of evidence, however,

which supports the idea that normal multisensory integration processes are

involved in synaesthesia. In particular, these are processes involved in

categorical perception, which integrate information about objects across

sensory domains and generate conceptual and supramodal representations.

For any form of synaesthesia, the

particular pairings that emerge between inducers and concurrents are

idiosyncratic and tend to be dominated by apparent arbitrariness in any

individual. However, by looking across many synaesthetes, it is possible to

discern clear trends in such pairings, for example between particular letters

and their synaesthetic colours. In English speakers, the letter B may be more

commonly blue than other colours (perhaps 30% of the time) and the letter Y

more commonly yellow (as high as 50% of the time) (Barnett et al., 2008; Rich et al., 2005). It

is even apparent that, for some synaesthetes, all of their colour-letter

pairings are derived from experience with childhood toys, such as refrigerator

magnets (Witthoft and Winawer, 2006). Similarly, for many synaesthetes with number forms, the numbers 1

to 12 are arranged in a circle like a clock face (Galton, 1883). Many word-taste pairings can also be explained by semantic

associations, such as “Cincinnati” tasting of cinnamon and “Barbara” tasting of

rhubarb (Simner, 2007).

There are thus clear cultural and semantic

influences on the particular pairings that emerge in developmental

synaesthesia. Some theorists have argued that such trends demonstrate that

synaesthesia is induced by learning and, even further, that the purpose of synaesthesia is to aid in

learning the inducing categories (Asano and Yokosawa, 2013; Mroczko-Wasowicz and

Nikolic, 2014; Watson et al., 2012; Yon and Press, 2014).

How can the idea that synaesthesia reflects

innate, genetic differences be reconciled with models that suggest it is driven

by learning? Here I develop a theoretical framework showing that these two

models are quite compatible (previously sketched out in Mitchell, 2013). I argue: (i) that the predisposition to develop synaesthesia at

all is genetic and innate; (ii) that the particular form and the pairings that

emerge are driven largely by idiosyncratic connectivity differences; but (iii)

that because the processes through which such pairings consolidate over time

involve normal mechanisms of multisensory learning and categorical perception,

the outcome can also be influenced by experience.

Synaesthesia as an associative phenomenon

Developmental synaesthesia can be present

in diverse forms and experienced in qualitatively distinct ways. One important

distinction is between synaesthetes who are “projectors” and those who are

“associators”. For the former, the concurrent percept is actively and vividly

perceived, either out in the world or “in the mind’s eye”, while for the latter

it is merely conceptually activated in the way that saying the word “banana”

activates the concept of yellow, possibly even prompting a visual image of the

object in that colour, but is unlikely to induce a veridical percept of yellow

out in the world.

There is another important phenomenological

distinction between lower-level, truly cross-sensory synaesthesia, and

higher-level, more conceptual forms. In the former, taking coloured hearing as

an example, any sound may induce a visual percept, whether the person has ever

heard it before or not. The same sound will tend to induce the same visual

percept, but this does not seem to require prior experience.

For many associative forms, however, the

synaesthetic associations arise only with a particular set of stimuli.

Crucially, these are almost exclusively stimuli that are (i) categorical, and

(ii) learned (often over-learned), such as letters, numbers, days of the week,

months of the year, musical notes, words, etc. The emergence of these

associative forms of synaesthesia must thus necessarily involve learning at some

level, and indeed clearly interacts with normal processes through which the multisensory

attributes of objects are learned.

Cross-modal learning and categorical perception

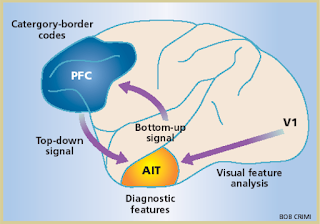

As we develop perceptual expertise, we come

to categorise objects into types and to recognise particular instances as

tokens of such types (Binder and Desai, 2011; Kourtzi and Connor,

2011).

Moreover, for any particular object, we develop a conceptual framework that

incorporates its many properties – a schema linking its various attributes.

Thus, the concept of a banana includes its typical shape, colour, taste, and

other semantic associations. While such representations incorporate attributes

from multiple sensory domains, they are essentially conceptual and supramodal.

There is good evidence that such supramodal representations involve activity in

specific associative areas of the brain, located in anterior inferotemporal

cortex (AIT), which can be thought of as “knowledge areas” (Chiou et al., 2014; Kourtzi and Connor,

2011).

Even for recent inventions like written

alphabets, these areas tend to develop in the same positions across people (Dehaene and Cohen, 2007). This suggests that the specialisation of cortical areas for

particular classes of objects relies on an evolutionarily programmed pattern of

connectivity that places them at a convergence point of multiple, parallel

hierarchies, enabling them to integrate information across the relevant sensory

modalities.

Such areas are differentially activated by

tasks that tap into semantic knowledge, and lesions or temporary inactivation of

these areas can result in object agnosias (De Renzi, 2000) – the inability to access all the attributes of an object when the

concept is activated (Chiou et al., 2014; Kourtzi and Connor,

2011; Mitchell, 2011). For

example, prosopagnosia, or face blindness, represents an inability to recognise

people’s identity from their faces, though other aspects of face processing may

remain intact. Similarly, colour agnosia refers to the inability to link

characteristic colours into the schemas of objects, despite normal colour

perception and discrimination.

Both these conditions can be caused by

injuries to associative areas, but, intriguingly, both can also be innate and

inherited (Behrmann and Avidan, 2005; Duchaine et al., 2007; Nijboer et al., 2007; van Zandvoort et al., 2007). The

fact that such conditions can be genetic highlights the fact that differences

in brain wiring (either structural or functional) can impact, very selectively,

on higher-order conceptual processes, presumably by altering the anatomical

substrates through which perceptual information is processed (Mitchell, 2011). Indeed, structural and functional neuroimaging studies have

highlighted reduced connectivity within extended networks of cortical areas in

these conditions. By contrast, synaesthesia may be caused by hyperconnectivity,

linking additional areas into the conceptual schemas of learned categories of

objects (Mitchell, 2011).

The processes by which such schemas emerge

can be illustrated by considering how letters are learned. As children are

learning to read they must learn to recognise and distinguish the various

graphemes of the alphabet and also link them to the appropriate phonemes of

their native language (Blomert and Froyen, 2010). In the visual domain, recognising graphemes requires, firstly,

extraction of increasingly complex visual features across the hierarchy of

areas in the ventral visual stream (Kravitz et al., 2013). Though it is a simplification, it is roughly true that each level in

the visual hierarchy extracts more complex features by integrating inputs from

multiple neurons at the level below, eventually enabling representation of

shapes and objects across the visual field.

Areas that are higher still become

specialised for processing specific types of visual information that correspond

to various categories (letters, faces, objects, scenes). This kind of

categorical perception enables the recognition of various instances of an

object – different sizes, views or versions (such as the letter “A” in various typefaces and cases: A,

A, A, a) – all of which can activate the representation of the concept of

the object (Kravitz et al., 2013). Similar processes arise as children learn spoken language – they

develop expertise in recognising the typical speech sounds of their native

language, but become deficient in distinguishing between uncommonly used

phonemes.

Neuronal networks in general can learn in

the following fashion. Any given stimulus will activate a distinct subset of

neurons across an area of cortex, which can be thought of, reasonably

accurately, as a two-dimensional sheet of highly interconnected cells. Due to

their coincident activation, the connections between these neurons will be

slightly strengthened (Hebb, 1949). If a particular stimulus is seen over and over again, this subset

of neurons will become a functional unit, primed to respond en masse to similar

stimuli. (More realistically, the properties of the stimulus may be represented

not by one static pattern but by the dynamic trajectory of firing patterns across

some time period (Daelli and Treves, 2010)).
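
As a purely illustrative aside, the Hebbian strengthening described above can be sketched in a few lines of Python. The number of units, the learning rate, the firing threshold and the function names (`hebbian_train`, `respond`) are all assumptions made for this sketch and are not taken from the cited work; real cortical dynamics are of course far richer.

```python
# Toy Hebbian sketch: units that fire together have their mutual
# connection strengthened. All parameters are illustrative assumptions.

def hebbian_train(patterns, n_units, rate=0.1, epochs=20):
    """Return a weight matrix after repeated exposure to the patterns."""
    weights = [[0.0] * n_units for _ in range(n_units)]
    for _ in range(epochs):
        for pattern in patterns:
            for i in range(n_units):
                for j in range(n_units):
                    if i != j:
                        # The weight grows only when both units are active.
                        weights[i][j] += rate * pattern[i] * pattern[j]
    return weights


def respond(weights, cue, threshold=1.0):
    """A unit fires if it is cued directly or receives enough weighted input."""
    n = len(cue)
    return [
        1 if cue[i] or sum(weights[i][j] * cue[j] for j in range(n)) > threshold
        else 0
        for i in range(n)
    ]


# Hypothetical stimulus: units 0-2 fire together, units 3-5 stay silent.
stimulus = [1, 1, 1, 0, 0, 0]
w = hebbian_train([stimulus], n_units=6)

within = w[0][1]  # connection between two co-active units
across = w[0][3]  # connection between an active and a silent unit

# A partial cue (two of the three units) recruits the whole trained subset.
completed = respond(w, [1, 1, 0, 0, 0, 0])
```

In this toy case the repeatedly co-activated units end up strongly interconnected, so a partial cue is enough to drive the whole subset, which is one simple way to picture a group of neurons becoming a functional unit.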

The patterns of neuronal activity that

represent any one object can be thought of as attractor states – any stimulus

that occupies a nearby spot in perceptual space (e.g., that has a similar shape

or sound) will be “pulled into the attractor”, with the network state

ultimately converging on a pattern that represents that object. This

“perceptual magnet” effect can be observed in psychophysical results, which

show that discrimination between stimuli that fall within a category is poorer

than that between two stimuli that are equally distant in stimulus parameters

but that span a categorical boundary (Daelli and Treves, 2010). It is important to note that this perceptual categorisation,

especially of ambiguous stimuli, is also sensitive to context and top-down

influences (Feldman et al., 2009) – indeed, all perception

involves the comparison of bottom-up signals with top-down expectations, or

prior probabilities, so as to allow active inference of the objects in the

world that are responsible for the pattern of sensory stimulation (Friston, 2010; Gilbert and Li, 2013).
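
The idea of a stimulus being “pulled into the attractor” can be illustrated with a Hopfield-style network, which is a standard textbook toy model of attractor dynamics and not the specific model of Daelli and Treves. The eight-unit patterns, their labels and the function names (`store`, `settle`) are hypothetical choices made only for this sketch.

```python
# Hopfield-style sketch of attractor dynamics (an assumed toy model).
# Units take the values +1 or -1; stored patterns become stable states.

def store(patterns):
    """Build symmetric weights from the stored patterns (Hebbian rule)."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def settle(w, state, max_sweeps=10):
    """Update units one at a time until the network state stops changing."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(w[i][j] * state[j] for j in range(n))
            # A unit keeps its value when the net input is exactly zero.
            new = state[i] if field == 0 else (1 if field > 0 else -1)
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            break
    return state


# Two hypothetical object representations (chosen to be orthogonal).
PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]
w = store([PATTERN_A, PATTERN_B])

# A degraded version of A (one unit flipped) lies near A in state space.
degraded_a = [1, 1, 1, -1, -1, -1, -1, -1]
recovered = settle(w, degraded_a)
```

Here the degraded input converges on the stored pattern `PATTERN_A`, a minimal picture of how nearby stimuli can end up activating the same categorical representation.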

Linking the visual and auditory attributes

of an object requires yet a higher level of integration and abstraction (Kourtzi and Connor, 2011). It is driven by the statistical regularities of experience – when the

letter A is seen, it is typically accompanied by the sound ā, as in hay, or ă, as in cat. The representations of these

shapes and sounds are thus reliably contingent and can lead to the development

of a higher-level representation, incorporating both elements. The brain thus builds up cognitive representations of letters as

audiovisual objects with characteristic attributes, despite the fact that these

are essentially arbitrary pairings between symbols and sounds, determined

purely by convention (Blomert and Froyen, 2010).

These processes reinforce each other. Though

spoken language is learned much earlier and much more easily than written

language, learning to read nevertheless increases the ability to distinguish

between phonemes (which are not the natural basic units of speech) (Blomert and Froyen, 2010; Dehaene et al., 2010).

Conversely, phonetic representations are involved in strengthening categorical

representations of graphemes (Brem et al., 2010). The

process of forming these associations is quite protracted, taking years to

reach a level of perceptual expertise that is effectively automatic, as

demonstrated by cross-modal mismatch negativity signals (Blomert, 2010). The development of such automaticity is lacking in dyslexia,

presumably contributing to the fact that reading remains effortful despite

extensive training.

In this model of schema formation, any areas

that are reliably co-activated will be incorporated into the schema of an

object, as supramodal areas monitor patterns of co-activation across many lower

areas and represent the statistical regularities of such contingencies. This

leads to a model of synaesthesia whereby innate differences in brain wiring

produce internally generated percepts, which, over time, are incorporated

through the normal processes of cross-modal learning into the schemas of the

inducing objects.

A model unifying innate differences with learning processes

This model of associative synaesthesia

requires in the first place some intrinsic cross-activation of additional areas

not normally activated by particular objects (an innate difference between

synaesthetes and non-synaesthetes). For letters, this might be an area

representing colour, for example (but the idea can be readily extended to other

forms). If such an area is topographically interconnected with, say, the grapheme

area, so that nearby neurons in the first area project to nearby neurons in the

second area (Bargary and Mitchell, 2008), then activation of the pattern of neuronal firing that represents

any particular letter will necessarily cross-activate some (arbitrary) pattern

of neuronal firing in the colour area (Brouwer and Heeger, 2009; Li et al., 2014).

Given that the colour areas mature much

earlier than the grapheme area (Batardiere et al., 2002; Bourne and Rosa, 2006; Dehaene and Cohen, 2007), such

patterns will likely evolve towards a set of attractor states that already

represent specific colours (Brouwer and Heeger, 2009; Li et al., 2014). This

may or may not lead to a conscious and vivid percept of colour, but should at

least lead to the activation of the concept of a colour. Over time, with

extensive repetition, this internally generated sensory property will come to

be incorporated into the schema of that letter, becoming as much a part of the

concept of the letter as its shape(s) and sound(s).

In this model, without any other

influences, the particular pairings that emerge would be expected to be largely

arbitrary – dependent on the particular cross-connectivity at the anatomical

level. Such a model could explain observed second-order trends, whereby

similarly shaped letters tend to have similar colours within individual

synaesthetes, even though these colours differ across synaesthetes (Watson et al., 2012). The letters E and F, for example, are often similarly coloured,

which would be expected from an arbitrary topographic mapping between areas

representing shape space and colour space (Brang et al., 2011).
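
A small sketch may help to show why an arbitrary but topographic mapping would give similar colours to similarly shaped letters within an individual, while the colours themselves differ between individuals. Everything here is a hypothetical simplification: shape space and colour space are reduced to two dimensions, the letter coordinates are invented, and each synaesthete's cross-connectivity is stood in for by an arbitrary linear map.

```python
import math

# Toy topographic mapping from a 2-D "shape space" to a 2-D "colour space".
# Coordinates and mappings are invented purely for illustration.

def project(mapping, point):
    """Apply a 2x2 linear map, standing in for smooth cross-connectivity."""
    (a, b), (c, d) = mapping
    x, y = point
    return (a * x + b * y, c * x + d * y)


def distance(p, q):
    """Euclidean distance between two points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


# Hypothetical positions: E and F are close in shape, O is far from both.
shape = {"E": (1.0, 1.0), "F": (1.0, 0.8), "O": (-1.0, -1.0)}

# Two hypothetical synaesthetes with different, arbitrary mappings.
synaesthete_1 = ((0.5, 0.2), (-0.3, 0.4))
synaesthete_2 = ((-0.4, 0.1), (0.2, 0.6))


def colours(mapping):
    """Colour-space position induced by each letter under a given mapping."""
    return {letter: project(mapping, pt) for letter, pt in shape.items()}


c1 = colours(synaesthete_1)
c2 = colours(synaesthete_2)
```

Under both mappings E and F land close together in colour space and far from O, yet E lands in quite different places for the two hypothetical individuals, which is the pattern of second-order trends described above.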

In addition, one can imagine how the

pairings might be influenced by characteristics that affect the types of neural patterns representing

specific letters or colours (Chiou and Rich, 2014; Deroy and Spence,

2013a; Ward et al., 2006; Watson et al., 2012). For

example, there could be some correspondences between the states representing

higher frequency letters and those representing higher intensity colours (such

as the proportion of neurons within the area that are recruited to the

representational pattern or some dynamical property of the pattern), which

would make pairings between members of those two types more likely to emerge. While

speculative, this kind of scenario may provide an explanation of cross-sensory

trends that do not rely on semantic information but reflect some currently

unknown representational parameters that hold across modalities.

As with the pairings between graphemes and

phonemes that emerge during learning to read, synaesthetic pairings also take a

long time to coalesce. Simner and colleagues found in a longitudinal study of

children an increase in both the number and stability of synaesthetic

correspondences between letters and colours at age 7/8 compared to age 6/7 (Simner et al., 2009) and more again at age 10/11 (Simner and Bain, 2013).

Importantly for this model, this protracted

period of consolidation leaves the opportunity for semantic associations to

influence the ultimate outcome, in the same way that they do when learning the

attributes of any object. Such influences could explain the observed trends in

specific pairings mentioned above. For example, when a synaesthete is learning

the letter B, and their colour area is being cross-activated in some arbitrary

pattern, the semantic relationship between B and “blue” may prime the neuronal

pattern representing blue, making it more likely for the network to move toward

that attractor state, which will in turn be reinforced by each such

co-activation. This can be interpreted in a Bayesian context as top-down

signals conveying a prior probability of “blue”, in the context of the letter

B, which, to a greater or lesser extent across individuals, will tend to

over-ride the bottom-up sensory information, biasing the incorporation of a

consistent association with this colour into the emerging schema of the letter.

It is not difficult to see how such semantic influences could act in other

kinds of synaesthesia – for example, if the numbers 1 to 12 are regularly seen

in a clock-face arrangement, that may influence the spatial pattern that emerges

in an individual’s number line. (It will be interesting to see whether this

trend changes as clock faces become rarer).
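
The Bayesian reading of the letter B example can be made concrete with a small numerical sketch. The four colour categories, the probabilities and the names (`posterior`, `cross_activation`, `strong_prior`, `weak_prior`) are all invented for illustration; the only thing taken from the argument above is that a top-down prior is combined with bottom-up activation, and that the strength of the prior may vary across individuals.

```python
# Toy Bayesian sketch: a semantic prior for "blue" combined with an
# arbitrary pattern of cross-activation. All numbers are assumptions.

def posterior(prior, likelihood):
    """Multiply prior by likelihood for each colour and normalise."""
    unnormalised = {c: prior[c] * likelihood[c] for c in prior}
    total = sum(unnormalised.values())
    return {c: v / total for c, v in unnormalised.items()}


def most_likely(distribution):
    """Return the colour with the highest probability."""
    return max(distribution, key=distribution.get)


# Bottom-up signal: arbitrary cross-activation that happens to favour red.
cross_activation = {"red": 0.40, "green": 0.20, "blue": 0.30, "yellow": 0.10}

# Top-down signal: the semantic link between B and "blue", strong or weak.
strong_prior = {"red": 0.15, "green": 0.15, "blue": 0.55, "yellow": 0.15}
weak_prior = {"red": 0.24, "green": 0.24, "blue": 0.28, "yellow": 0.24}

with_strong_prior = posterior(strong_prior, cross_activation)
with_weak_prior = posterior(weak_prior, cross_activation)
```

With the strong prior the most probable colour becomes blue, whereas with the weak prior the arbitrary bottom-up pattern still wins and the letter ends up red. This is in keeping with the observation that such pairings are trends across synaesthetes and not rules.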

In this way, the particular synaesthetic

associations that emerge through this protracted process can be biased by

top-down semantic processes, without being entirely determined by them,

reflecting observed trends rather than rules of associations. Fundamentally,

this means that while such semantic processes may affect the outcome, they are

not the prime drivers of the phenomenon of synaesthesia. The condition of

synaesthesia is thus not caused by

learning, and there is certainly no reason to think of it as having a purpose in facilitating learning. It may

have that effect, but it is a conceptual mistake to interpret that as a reason

for its existence.

The difference that makes a difference, in

determining why some people get synaesthesia and others do not, is genetic

(clearly so in many cases and likely so in others). The model of

cross-activation at some level of the perceptual hierarchy remains a

parsimonious one for the mechanism through which these genetic differences

mediate their primary effects (Bargary and Mitchell, 2008; Hubbard et al., 2011), and

has at least general support from many neuroimaging studies (Rouw et al., 2011). Whether this involves primary changes in structural or functional

connectivity remains an open question, but in either case, the outcome is an

altered sensory experience, with some internally generated percept that gets

assimilated into the schemas of the inducing objects through normal processes

of multisensory learning.

This model is subtly but importantly

different from ones that explain synaesthesia on the basis of only an acute

difference between the brains of synaesthetes and non-synaesthetes. For some

synaesthetes, such as audiovisual synaesthetes for whom new sounds induce a

vivid visual percept, there may – perhaps must – be some ongoing sensory

cross-talk. But for associators, their synaesthetic experiences may arise not

because their brain wiring is

slightly different, but because that difference existed over development, while

the person was learning various categories of objects. An interesting

possibility that may link the phenomenologically distinct forms of projector

and associator synaesthesia is that an early state of vivid synaesthesia, which

may fade in conscious experience over time (as reported at least anecdotally by

some adults), could nevertheless lead to long-lasting conceptual associations

if it were present during this learning period.

The theoretical framework presented here is

consistent with both an innate difference as the fundamental driver of the

condition of synaesthesia, and with semantic and experiential influences on the

eventual phenotype that emerges. In particular, it proposes that the internally

generated synaesthetic percepts are treated similarly to other sensory

information as the brain is learning the sensory attributes of objects and

developing schemas to conceptually link them.
